The computing landscape is undergoing a profound transformation, driven by the integration of artificial intelligence directly into personal devices. As AI-powered PCs, equipped with dedicated Neural Processing Units (NPUs), increasingly hit the market, a critical question arises: Are we truly entering a revolutionary new era of personal computing, or are these powerful devices simply ushering in a more complex frontier of privacy concerns? This article delves into the dual nature of this technological shift, exploring both the immense potential NPUs offer for enhanced user experiences and the inherent risks they pose regarding personal data and digital autonomy. Understanding this balance is crucial for users, developers, and policymakers alike as we navigate the future of our digital lives.

The promise of on-device AI

The advent of AI-powered PCs, featuring integrated NPUs, represents a significant leap from traditional computing paradigms. An NPU is a specialized processor designed to efficiently handle the mathematical operations fundamental to artificial intelligence workloads, such as the large matrix multiplications and convolutions at the core of neural networks. Unlike cloud-based AI, which needs a network connection to send data to remote servers and receive the results, on-device AI processes information directly on your personal computer. Removing that network round trip makes AI-driven tasks faster and more responsive, and running them on the NPU rather than the CPU or GPU typically uses less power. Imagine real-time language translation without internet delays, sophisticated image and video editing that leverages AI to automate complex tasks, or enhanced security features that can detect anomalies in your system behavior locally, all without sending your sensitive data off-device.

Furthermore, on-device AI opens new possibilities for personalized experiences. From predictive text that adapts to your writing style to intelligent assistants that learn your routines and preferences without transmitting them to external servers, the potential for seamless, tailored interactions is immense. Creative professionals can benefit from AI-powered tools that generate content, upscale images, or even compose music in real time, directly on their workstation. This move towards localized AI is not just about convenience; it promises a more robust, reliable, and potentially more private computing experience by keeping data closer to its origin point.

The unseen hand of data processing

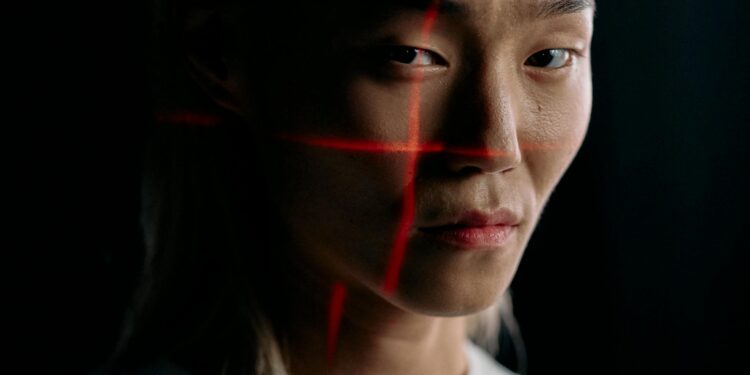

While the promise of on-device processing sounds inherently private, the reality is more nuanced. Even when data remains on your device, its processing by AI models introduces a new layer of complexity regarding privacy. NPUs accelerate the analysis of vast amounts of local data – your documents, photos, voice recordings, browsing habits, and even biometric information. The critical question isn’t whether this data leaves your device, but rather *how* it is processed, interpreted, and potentially used by the operating system or applications that leverage the NPU.

Consider the sophisticated telemetry systems integrated into modern operating systems. Even if an AI feature like smart search or content recommendations operates locally, the metadata or aggregated insights derived from its activity might still be collected and transmitted to the manufacturer for product improvement or feature refinement. This ‘anonymized’ data, while not directly identifiable, can still paint a detailed picture of user behavior when combined with other data points. Moreover, the very nature of AI involves pattern recognition, and these patterns, derived from your personal data, could potentially be used to infer sensitive information about your habits, preferences, or even health. Without clear transparency and robust user controls, the deep, continuous analysis of personal data by on-device AI could become an invisible hand, shaping user experiences in ways that are not always clear or consented to, creating a subtle but pervasive privacy challenge.
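
To make the linkage risk concrete, here is a toy sketch in Python. All records, field names, and the `link_records` helper are hypothetical and do not describe any vendor's actual telemetry. The point is only that removing names does not prevent re-identification when a few coarse attributes can be joined against another dataset.

```python
from collections import defaultdict

# Hypothetical "anonymized" telemetry: no names, only a pseudonymous device ID
# plus coarse attributes that might be logged alongside AI-derived insights.
telemetry = [
    {"device": "a91f", "zip": "94107", "birth_year": 1985, "insight": "frequent late-night health searches"},
    {"device": "c03b", "zip": "94107", "birth_year": 1992, "insight": "commute starts at 07:40 daily"},
    {"device": "e77d", "zip": "10001", "birth_year": 1985, "insight": "photos tagged 'clinic'"},
]

# Hypothetical auxiliary dataset (e.g. a public or leaked list) that includes names.
auxiliary = [
    {"name": "Alice", "zip": "94107", "birth_year": 1985},
    {"name": "Bob", "zip": "94107", "birth_year": 1992},
    {"name": "Carol", "zip": "10001", "birth_year": 1985},
    {"name": "Erin", "zip": "10001", "birth_year": 1985},
]


def link_records(telemetry, auxiliary, keys=("zip", "birth_year")):
    """Join two datasets on shared attributes (quasi-identifiers).

    A telemetry record is re-identified only when exactly one named person
    shares its combination of attribute values.
    """
    index = defaultdict(list)
    for person in auxiliary:
        index[tuple(person[k] for k in keys)].append(person["name"])

    reidentified = {}
    for record in telemetry:
        candidates = index.get(tuple(record[k] for k in keys), [])
        if len(candidates) == 1:  # unique match: the "anonymous" record now has a name
            reidentified[candidates[0]] = record["insight"]
    return reidentified
```

Calling `link_records(telemetry, auxiliary)` re-identifies Alice and Bob, because each is the only person with their ZIP code and birth year. The third record stays ambiguous only because Carol and Erin happen to share the same combination.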

Navigating the privacy paradox

The inherent tension between the convenience and power of on-device AI and the imperative for personal privacy presents a significant “privacy paradox.” Users are drawn to the enhanced capabilities AI offers, yet they rightly hesitate when those capabilities come at the expense of their data autonomy. Navigating this paradox requires a multi-faceted approach involving technology, policy, and user education.

Manufacturers and software developers bear a primary responsibility to implement privacy-by-design principles. This means building AI features with data minimization as a core tenet, providing granular user controls for data processing, and offering clear, understandable explanations of how local AI features use personal information. Opt-in consent for sensitive data processing, rather than opt-out defaults, should become the standard. Furthermore, the industry must explore and adopt privacy-enhancing technologies. Federated learning trains a shared AI model across many devices, sending only model updates, never the raw personal data, for aggregation. Differential privacy adds carefully calibrated statistical noise to data or query results, so aggregate insights remain useful while no individual's contribution can be reliably inferred.
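
The two privacy-enhancing techniques named above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a production implementation. The federated part is a stripped-down form of federated averaging (FedAvg) on a hypothetical one-feature linear model. The differential-privacy part applies the Laplace mechanism to a single counting query. All function names and data are hypothetical.

```python
import random
from typing import Callable, List, Sequence, Tuple

Sample = Tuple[float, float]  # hypothetical (x, y) pair held privately on one device


# --- Federated averaging (simplified FedAvg) --------------------------------
def local_update(weights: List[float], data: Sequence[Sample], lr: float) -> List[float]:
    """One SGD pass for the model y = w0 + w1 * x, run on the device that owns `data`."""
    w0, w1 = weights
    for x, y in data:
        err = (w0 + w1 * x) - y
        w0 -= lr * err
        w1 -= lr * err * x
    return [w0, w1]


def federated_average(weights: List[float], clients: Sequence[Sequence[Sample]],
                      rounds: int = 500, lr: float = 0.3) -> List[float]:
    """Each round, clients train locally and share only updated weights; the
    server averages them, weighted by each client's number of samples."""
    total = sum(len(c) for c in clients)
    for _ in range(rounds):
        updates = [local_update(weights, c, lr) for c in clients]  # raw data never leaves clients
        weights = [sum(u[i] * len(c) for u, c in zip(updates, clients)) / total
                   for i in range(len(weights))]
    return weights


# --- Differential privacy (Laplace mechanism for a count) --------------------
def laplace_noise(scale: float, rng: random.Random) -> float:
    """The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    Note: Python's `random` is used for simplicity and is not suitable for a real guarantee."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_count(values: Sequence, predicate: Callable[[object], bool],
             epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy. Adding or removing one
    person changes a count by at most 1 (sensitivity 1), so the noise scale is 1/epsilon."""
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)


# Hypothetical usage: two devices hold private samples of y = 2x + 1.
device_a = [(0.0, 1.0), (0.25, 1.5)]
device_b = [(0.5, 2.0), (0.75, 2.5), (1.0, 3.0)]
model = federated_average([0.0, 0.0], [device_a, device_b])

# Hypothetical per-user flags, e.g. "used feature X today".
flags = [True, False, True, True, False]
noisy = dp_count(flags, lambda f: f, epsilon=1.0, rng=random.Random(42))
```

In the federated sketch the server only ever sees weight vectors, yet such updates can still leak information about the underlying data. This is one reason real deployments often combine federated learning with differential privacy or secure aggregation. In the counting example, a smaller `epsilon` gives stronger privacy at the cost of noisier results.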

On the regulatory front, existing frameworks like GDPR and CCPA provide foundational principles for data protection, but their application to deeply embedded, on-device AI requires careful interpretation and potentially new guidelines. For users, empowerment comes through education. Understanding what data AI features access, how to manage permissions, and recognizing the trade-offs involved in enabling certain functionalities are crucial steps towards reclaiming digital autonomy in the age of AI PCs. The table below illustrates some of the considerations:

| Aspect | Benefits of NPU PCs | Potential Privacy Risks |
|---|---|---|
| Data Processing | Faster, offline, lower latency | Continuous local data analysis, potential for inference |
| Performance | Real-time AI features, increased efficiency | Subtle collection of telemetry/usage patterns |
| User Experience | Highly personalized, intuitive interactions | “Black box” AI decisions, algorithmic bias from data |
| Security | Enhanced on-device threat detection | Risk of sensitive data exposure through bugs or malicious apps |

Beyond the hype: Practical implications and future outlook

Looking past the marketing, AI-powered PCs with NPUs are genuinely ushering in a new era of personal computing. They promise to transform how we interact with our devices, making them more intelligent, responsive, and tailored to our individual needs. The shift from cloud-centric AI to on-device processing is a fundamental architectural change that can deliver tangible benefits in terms of performance, reliability, and potentially privacy, by reducing reliance on external servers.

However, this new frontier is inextricably linked with a novel set of privacy challenges. The mere fact that data is processed locally does not automatically equate to privacy. The depth of analysis performed by on-device AI, the potential for inferences to be drawn from behavioral data, and the methods by which these insights might be shared or leveraged by operating system and software developers, all warrant close scrutiny. The future success and ethical integration of AI PCs hinge on transparency, robust user controls, and a commitment from the industry to prioritize user privacy alongside innovation. For consumers, the choice will increasingly be about balancing the allure of advanced AI features with a critical understanding of their data implications, becoming more informed stewards of their digital footprints on these powerful new machines.

The emergence of AI-powered PCs with dedicated NPUs marks a significant inflection point in personal computing. They offer a compelling vision of enhanced productivity, creativity, and seamless user experiences, using on-device processing to deliver speed and efficiency for AI workloads that general-purpose processors struggle to match. This represents a genuine advancement, promising a more capable and responsive digital companion. Yet, as we embrace these powerful devices, we must remain acutely aware of the concurrent privacy challenges they introduce. The very proximity and continuous analysis of our personal data, even if it stays local, demands a new level of vigilance regarding data governance, user controls, and transparency from manufacturers.

Ultimately, whether AI PCs truly usher in a new era of empowerment or become a new frontier of privacy concerns rests on how we collectively navigate this intricate balance. It requires responsible development that embeds privacy-by-design, clear regulatory frameworks adapted to on-device AI, and, crucially, an educated user base capable of making informed decisions about their digital lives. The potential for innovation is immense, but it must not overshadow the fundamental right to digital autonomy and data protection in this exciting, yet complex, new chapter of personal computing.