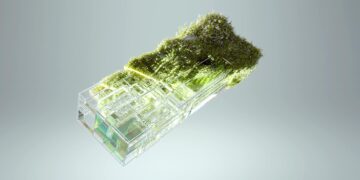

Artificial intelligence has moved from science fiction into our daily lives, embedding itself deeply in the technology we use. For years, AI resided predominantly in the cloud, processing vast amounts of data on distant servers. However, a significant shift is underway: the rise of on-device AI. This new frontier promises to bring intelligence directly to our smartphones, smartwatches, and other gadgets, enabling them to learn and adapt without constant internet connectivity. But as this technology matures, a critical question emerges: Is on-device AI truly ushering in an era of genuinely intelligent devices, capable of intuitive understanding and proactive assistance, or is it merely adding another layer of complexity, making our tech more convoluted than intelligent?

The promise of truly smart companions

The appeal of on-device AI is multi-faceted, addressing some of the most pressing concerns with cloud-dependent systems. Foremost among these is privacy. When AI processing happens locally on your device, sensitive personal data doesn’t need to be uploaded to remote servers, significantly reducing the risk of breaches or unwanted surveillance. This architectural shift gives users greater control over their information and helps build trust in the technology. Beyond privacy, local processing improves speed and responsiveness. Imagine voice assistants that respond to commands instantly, without waiting on a network round-trip, or cameras that apply complex image optimizations in real time, delivering finished photos almost as soon as the shutter fires. Taking the network out of the loop makes devices feel more immediate and intuitive.

Furthermore, on-device AI offers robust offline functionality. Our gadgets can maintain their “intelligence” even in areas with no internet access, from remote hiking trails to international flights. This ensures continuous, reliable performance, regardless of connectivity. Personalization also receives a significant boost; local AI can learn individual user habits, preferences, and patterns over time, tailoring experiences uniquely to each person without requiring their data to leave the device. From adaptive battery management to predictive text that truly understands your unique vocabulary, the goal is to make our devices feel like truly intelligent extensions of ourselves, anticipating our needs rather than just reacting to commands.
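To make "learning your vocabulary without the data leaving the device" concrete, here is a deliberately tiny sketch. It assumes a simple bigram (word-pair) counter, a hypothetical stand-in chosen for illustration, far simpler than the models real keyboards use. The point it shows is that both learning and prediction run locally on text the user has typed. The class and method names are invented for this example.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, List


class LocalPredictor:
    """Toy next-word predictor that learns only from text typed on this device.

    Hypothetical illustration: counts live in memory here; a real keyboard
    would persist them to local storage rather than to a server.
    """

    def __init__(self) -> None:
        # previous word -> how often each next word followed it
        self.counts: DefaultDict[str, Counter] = defaultdict(Counter)

    def learn(self, sentence: str) -> None:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            self.counts[prev][nxt] += 1

    def suggest(self, prev_word: str, k: int = 3) -> List[str]:
        # .get avoids creating empty entries for words never seen before
        seen = self.counts.get(prev_word.lower(), Counter())
        return [word for word, _ in seen.most_common(k)]


predictor = LocalPredictor()
predictor.learn("See you at the dojo")
predictor.learn("Meet me at the dojo")
predictor.learn("Lunch at the office")
print(predictor.suggest("the"))  # ['dojo', 'office']
```

Because "dojo" followed "the" twice in this user's own typing, it ranks above "office". A different user's device would learn a different ranking from the same code.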

How edge intelligence reshapes device functionality

Understanding how on-device AI works helps demystify its capabilities and limitations. Unlike traditional cloud AI, which relies on powerful, centralized data centers, on-device AI operates at the “edge”, directly on the device itself. This is often facilitated by specialized hardware components known as Neural Processing Units (NPUs) or AI accelerators. These dedicated chips are designed to handle the arithmetic at the heart of machine learning models, chiefly the enormous numbers of multiply-accumulate operations inside neural networks. They typically do this with much greater energy efficiency than general-purpose CPUs or GPUs.
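Much of the work in neural network inference is multiply-accumulate (MAC) arithmetic: multiply an input by a weight and add the product to a running sum. NPUs are built to run very large numbers of these steps in parallel. The sketch below does not use any NPU API. It is plain Python that spells out one fully connected layer with a ReLU activation, simply to make the operation count tangible. The function name and layer sizes are illustrative assumptions.

```python
from typing import List, Tuple


def dense_relu(x: List[float], weights: List[List[float]],
               bias: List[float]) -> Tuple[List[float], int]:
    """Compute ReLU(W @ x + b) and count the multiply-accumulate (MAC) steps.

    An NPU executes this kind of arithmetic in parallel hardware; here it is
    written as plain loops for clarity.
    """
    macs = 0
    outputs = []
    for row, b in zip(weights, bias):
        acc = b
        for w, xi in zip(row, x):
            acc += w * xi  # one multiply-accumulate
            macs += 1
        outputs.append(max(0.0, acc))  # ReLU activation
    return outputs, macs


y, macs = dense_relu([1.0, 2.0], [[0.5, -1.0], [1.0, 1.0]], [0.0, 0.5])
print(y, macs)  # [0.0, 3.5] 4

# A single 256-input, 128-output layer already needs 256 * 128 MACs.
_, big = dense_relu([0.0] * 256, [[0.0] * 256 for _ in range(128)], [0.0] * 128)
print(big)  # 32768
```

Real models stack many such layers (and convolutions, which are also built from MACs), so a single photo or audio frame can require millions of these steps. That volume is why dedicated hardware pays off.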

When you take a photo, for instance, an NPU might immediately analyze the scene, identify objects, adjust exposure, and enhance colors based on a pre-trained model stored locally. Similarly, voice recognition models can run entirely on your phone, converting speech to text without sending a single audio clip to the cloud. This approach not only provides the benefits of privacy and speed but also offloads work from the device’s main processor, which can improve battery life and overall performance. The intelligence here is not the device thinking like a human. Instead, the device executes complex, pre-trained AI models efficiently and without external dependencies, which makes its responses feel seamless and immediate.

The double-edged sword: complexity vs. capability

While the promises of on-device AI are compelling, its implementation isn’t without challenges, and these can sometimes add complexity rather than intelligence. Developing and deploying effective AI models on resource-constrained devices requires significant optimization. Models must be pruned, quantized, or otherwise compressed to fit within limited memory and processing power, which can compromise their accuracy or breadth of understanding compared to their cloud-based counterparts. The result can be a device that is “smart” in specific, narrow applications but struggles with more general tasks. That creates a user experience that feels less like genuine intelligence and more like a collection of specialized tricks.
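Two common compression techniques are magnitude pruning, which zeroes out the weights with the smallest absolute values, and quantization, which stores weights at lower precision. The following is a minimal pure-Python sketch of both, assuming symmetric per-tensor int8 quantization. It does not reproduce any vendor's toolchain, and the weight values are made up. It shows where the accuracy cost comes from: the restored weights no longer exactly match the originals.

```python
from typing import List, Tuple


def prune_by_magnitude(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)  # how many weights to drop
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    drop = set(smallest)
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by q * scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [qi * scale for qi in q]


weights = [0.02, -0.5, 0.31, -0.01, 0.9, 0.05]
pruned = prune_by_magnitude(weights, sparsity=0.5)
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)
error = max(abs(a - b) for a, b in zip(pruned, restored))
print(pruned)  # [0.0, -0.5, 0.31, 0.0, 0.9, 0.0]
print(q)       # [0, -71, 44, 0, 127, 0]
print(f"max rounding error: {error:.4f}")
```

Each int8 weight takes a quarter of the memory of a 32-bit float, and half of these weights are now zero. The price is a small rounding error on every surviving weight (at most half of `scale` here), and small errors like this can add up across a model's many layers.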

Moreover, the fragmentation across different device manufacturers and their proprietary NPUs can complicate development, leading to inconsistencies in AI performance and features. Users might find that a feature works brilliantly on one brand’s phone but is absent or less effective on another. Power consumption is another consideration; while NPUs are efficient, running complex AI models continuously can still drain battery life, forcing developers to strike a delicate balance between persistent intelligence and device endurance. This often means that some “intelligent” features are only intermittently active or require user activation, detracting from a truly autonomous intelligent experience. The underlying question remains: are we building genuinely adaptable intelligence, or just highly optimized, complicated automation?
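The battery trade-off comes down to simple arithmetic. The sketch below assumes, as a simplification, that power draw is constant and additive: a fixed baseline plus an AI feature that is active for some fraction of the time (its duty cycle). All figures are hypothetical, not measurements of any real device. The sketch estimates runtime and finds the largest duty cycle that still meets a battery-life target.

```python
def battery_hours(capacity_mwh: float, baseline_mw: float,
                  model_mw: float, duty_cycle: float) -> float:
    """Estimated runtime when an AI feature is active `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    average_mw = baseline_mw + duty_cycle * model_mw  # average power draw
    return capacity_mwh / average_mw


def max_duty_cycle(capacity_mwh: float, baseline_mw: float,
                   model_mw: float, target_hours: float) -> float:
    """Largest duty cycle that still meets `target_hours` (0.0 if none can)."""
    budget_mw = capacity_mwh / target_hours - baseline_mw  # power left for the model
    if budget_mw <= 0:
        return 0.0
    return min(1.0, budget_mw / model_mw)


# Hypothetical figures, not measurements of any real device.
CAPACITY_MWH, BASELINE_MW, MODEL_MW = 15000.0, 500.0, 1000.0
print(battery_hours(CAPACITY_MWH, BASELINE_MW, MODEL_MW, 1.0))    # 10.0 (always on)
print(battery_hours(CAPACITY_MWH, BASELINE_MW, MODEL_MW, 0.0))    # 30.0 (always off)
print(max_duty_cycle(CAPACITY_MWH, BASELINE_MW, MODEL_MW, 20.0))  # 0.25
```

With these made-up numbers, keeping the feature always on cuts runtime from 30 hours to 10. Hitting a 20-hour target leaves room for the feature to run only a quarter of the time. This is the kind of budget that pushes "intelligent" features toward intermittent or user-triggered operation.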

| Feature | On-Device AI | Cloud AI |
|---|---|---|
| Privacy | Enhanced (data stays local) | Dependent on cloud provider policies |
| Speed/Latency | Near-instantaneous, no network delay | Dependent on network speed and server load |
| Offline Capability | Full functionality without internet | Limited or none without internet |
| Power Usage | Can be higher for complex, continuous tasks on device | Lower device power, higher server power |
| Model Complexity | Limited by device hardware and memory | Vastly scalable to extremely large models |
| Data Access | Processes local, personal data effectively | Accesses vast, centralized datasets for training |
| User Experience | Responsive, personalized, secure | Can feel less immediate, data privacy concerns |

Finding the balance: intelligence through thoughtful integration

Ultimately, the journey towards truly intelligent gadgets isn’t about choosing exclusively between on-device or cloud AI, but rather finding a synergistic balance. The future likely lies in a hybrid approach, where on-device AI handles immediate, privacy-sensitive tasks, while cloud AI provides the heavy lifting for tasks requiring vast datasets, real-time updates, or immense computational power, like complex generative AI models. This distributed intelligence allows devices to leverage the strengths of both environments, creating a more robust and adaptable system.
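A hybrid system needs some rule for deciding where each task runs. The sketch below is one hypothetical routing policy, not how any particular platform works. Privacy-sensitive work stays on the device. Large or data-hungry work goes to the cloud when a connection exists. Everything else runs locally for speed. The `Task` fields, the memory budget, and the returned labels are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    privacy_sensitive: bool  # involves personal data that should stay local
    model_size_mb: int       # memory the model needs to run
    needs_fresh_data: bool   # depends on information only the cloud has


def route(task: Task, device_budget_mb: int, online: bool) -> str:
    """Return "device", "cloud", or "unavailable" (illustrative policy only)."""
    fits_on_device = task.model_size_mb <= device_budget_mb
    if task.privacy_sensitive:
        # Never upload personal data; if the model is too big, decline instead.
        return "device" if fits_on_device else "unavailable"
    if not online:
        return "device" if fits_on_device else "unavailable"
    if task.needs_fresh_data or not fits_on_device:
        return "cloud"
    return "device"  # prefer local execution for latency when it fits


budget = 500  # hypothetical memory available for models, in MB
memo = Task("transcribe voice memo", True, 80, False)
big_gen = Task("large generative model", False, 20000, False)
print(route(memo, budget, online=True))      # device
print(route(big_gen, budget, online=True))   # cloud
print(route(big_gen, budget, online=False))  # unavailable
```

Declining a privacy-sensitive task that is too large, rather than quietly uploading it, is a deliberate design choice in this sketch. A real product might instead fall back to a smaller, less accurate local model.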

The key to making gadgets truly intelligent, rather than just more complicated, lies in thoughtful integration and user-centric design. Developers must focus on building AI features that genuinely enhance the user experience, solving real problems without adding unnecessary layers of interaction. Intuitive interfaces, clear feedback, and transparent control over AI functionalities will be paramount. When AI operates subtly in the background, making our devices perform better, faster, and more securely, without demanding our constant attention or understanding of its inner workings, that’s when we truly approach intelligence. It’s about empowering the user, not overwhelming them with technological prowess.

In conclusion, the advent of on-device AI represents a significant leap forward in our quest for smarter technology. It undeniably enhances our gadgets in crucial ways, offering substantial improvements in privacy, speed, offline capabilities, and personalized experiences. Features like real-time image processing, secure facial recognition, and context-aware notifications are tangible examples of intelligence moving closer to the user. However, this progress isn’t without its trade-offs, introducing new complexities related to hardware optimization, power management, and the inherent limitations of running sophisticated AI models on constrained devices. The challenge lies in navigating these complexities to ensure that the added intelligence genuinely translates into a more intuitive and beneficial user experience, rather than just a collection of technically impressive but ultimately overwhelming features. As we move forward, the most intelligent gadgets will be those that master the art of blending on-device prowess with strategic cloud support, making technology seamlessly serve us without demanding an advanced degree in AI to operate it.