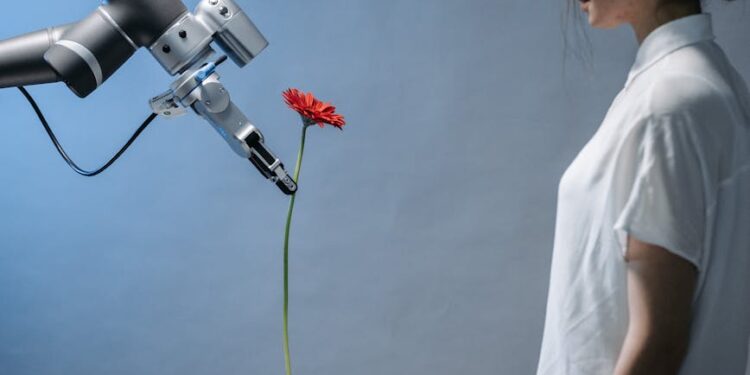

The boundary between science fiction and tangible reality is blurring at an accelerating pace, especially in the realm of artificial intelligence. For decades, we’ve envisioned sophisticated AI companions capable of truly understanding us, not just processing commands. That vision, once confined to cinematic screens and futuristic novels, is now becoming the tech world’s most feverish new frontier. We are entering an era where AI can transcend mere textual or auditory interaction, evolving into multimodal entities that can see our expressions, hear the nuances in our voice, and consequently, *understand* us in a deeply contextual and real-time manner. This profound shift from transactional AI to genuinely personal companions represents a monumental leap, promising to redefine our relationship with technology and fundamentally reshape daily life.

Beyond text and voice: The multimodal leap

For years, our interaction with artificial intelligence has largely been constrained by its input mechanisms. Whether typing queries into a search bar or issuing voice commands to a smart speaker, these interactions have predominantly been unimodal, relying on a single form of data input. While useful, this approach limits AI’s ability to grasp the full spectrum of human communication, which is rich and multifaceted. The advent of multimodal AI shatters these limitations by integrating diverse data streams: visual (through computer vision), auditory (speech recognition and sound analysis), and linguistic (natural language processing).

Imagine an AI that not only hears your words but also sees your furrowed brow, observes your body language, and detects the subtle shifts in your tone. Combining these signals supplies context that no single channel provides on its own. An AI capable of recognizing that your “I’m fine” is contradicted by slumped shoulders and a sigh understands far more than a lexical analysis of the words alone could reveal. This integrated perception allows for a more nuanced interpretation of intent, emotion, and situational awareness, moving AI from a reactive tool toward a proactive, empathic companion that reads people in a way much closer to how humans read one another.
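
The “I’m fine” example can be sketched as simple late fusion: each modality produces its own estimate, and the estimates are combined afterwards. In this illustrative Python sketch, the per-modality valence scores (from -1, distressed, to +1, positive) and their confidences are hypothetical inputs that upstream speech, vision, and language models are assumed to produce; the confidence-weighted average and the disagreement threshold are likewise assumptions, not a description of any particular system.

```python
from dataclasses import dataclass


@dataclass
class ModalityReading:
    """One modality's (hypothetical) estimate of emotional valence."""
    name: str
    valence: float     # assumed scale: -1 (distressed) .. +1 (positive)
    confidence: float  # assumed scale: 0 .. 1


def fuse_valence(readings: list[ModalityReading]) -> float:
    """Late fusion: confidence-weighted average of per-modality valence."""
    total_weight = sum(r.confidence for r in readings)
    if total_weight == 0:
        return 0.0
    return sum(r.valence * r.confidence for r in readings) / total_weight


def is_incongruent(readings: list[ModalityReading], threshold: float = 1.0) -> bool:
    """Flag strong disagreement between modalities (threshold is an assumption)."""
    valences = [r.valence for r in readings if r.confidence > 0]
    return bool(valences) and (max(valences) - min(valences)) >= threshold


readings = [
    ModalityReading("text", valence=0.6, confidence=0.9),      # the words "I'm fine"
    ModalityReading("posture", valence=-0.7, confidence=0.7),  # slumped shoulders
    ModalityReading("audio", valence=-0.5, confidence=0.6),    # an audible sigh
]
print(round(fuse_valence(readings), 3), is_incongruent(readings))  # prints: -0.114 True
```

The words alone would read as positive, but the fused score comes out slightly negative and the spread between modalities flags the mismatch. Late fusion is only one design choice; many multimodal models instead combine features earlier, inside the network itself.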

The alchemy of real-time understanding

The true magic of these next-generation AI companions lies not just in their ability to process multiple data types, but in their capacity to do so in real time. This isn’t about sequential processing, where an AI first hears, then sees, then processes language. Instead, it involves the simultaneous ingestion and integration of these diverse streams, enabling a near-instantaneous, coherent understanding of the user’s state and environment. This low-latency fusion of information is powered by advances in neural network architectures and parallel computing, allowing AI to perceive and react with a fluidity that approaches that of human interaction.
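
To make the contrast with sequential processing concrete, here is a toy sketch using Python’s standard `asyncio` module. Three simulated sensor streams (their names, frame counts, and rates are made up) feed a shared queue concurrently, and a fusion task keeps the latest observation from each modality, emitting a combined snapshot whenever any stream updates once all three have reported. The “latest value per modality” policy is an assumption chosen for simplicity; real systems typically fuse learned feature representations inside the model, and `asyncio` gives concurrency on a single thread rather than the parallel hardware the paragraph refers to.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class Observation:
    modality: str
    seq: int
    payload: str


async def sensor(modality: str, n_frames: int, period: float, queue: asyncio.Queue) -> None:
    """Hypothetical sensor stream: pushes one observation every `period` seconds."""
    for i in range(n_frames):
        await asyncio.sleep(period)
        await queue.put(Observation(modality, i, f"{modality}#{i}"))


async def fuser(queue: asyncio.Queue, modalities: set[str], total: int) -> list[dict]:
    """Keep the latest observation per modality; once every modality has
    reported at least once, emit a fused snapshot on each new observation."""
    latest: dict[str, Observation] = {}
    snapshots = []
    for _ in range(total):
        obs = await queue.get()
        latest[obs.modality] = obs
        if latest.keys() == modalities:
            snapshots.append({m: o.payload for m, o in latest.items()})
    return snapshots


async def main() -> list[dict]:
    queue: asyncio.Queue = asyncio.Queue()
    # modality -> (number of frames, seconds between frames); values are illustrative
    streams = {"video": (3, 0.03), "audio": (6, 0.015), "text": (1, 0.05)}
    total = sum(n for n, _ in streams.values())
    results = await asyncio.gather(
        fuser(queue, set(streams), total),
        *(sensor(m, n, p, queue) for m, (n, p) in streams.items()),
    )
    return results[0]  # the fuser's snapshots


snapshots = asyncio.run(main())
for snapshot in snapshots:
    print(snapshot)
```

Because the sensors run concurrently, the snapshots interleave updates from all three streams as they arrive; the final snapshot always holds the most recent frame from each.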

This real-time capability is crucial for fostering a sense of genuine presence and responsiveness. When an AI can immediately synthesize your words, your facial expression, and the ambient sounds of your environment, it can adapt its responses and actions instantly. It moves beyond simple pattern recognition to a deeper comprehension of context, intent, and emotional undertones. This transforms the interaction from a series of discrete commands and responses into a continuous, flowing dialogue, where the AI can anticipate needs, offer relevant suggestions, and provide support that feels genuinely intuitive and connected.

Crafting the personal companion: Memory, context, and empathy

What truly elevates these multimodal AIs from advanced tools to “companions” is their capacity for personalization. This goes beyond customizable settings; it involves the development of a persistent memory and a profound understanding of individual user context. A truly personal AI companion learns and evolves with you, remembering past conversations, preferences, routines, and even your emotional history. This long-term memory allows the AI to build a rich internal model of the user, enabling it to offer increasingly tailored and relevant interactions.
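
A persistent memory of this kind can be approximated, in miniature, as a store of timestamped notes retrieved by relevance and recency. This Python sketch is hypothetical throughout: `MemoryStore`, its 7-day half-life, and the bag-of-words cosine similarity (a crude stand-in for the learned text embeddings a real system would more plausibly use) are illustrative assumptions.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


def tokenize(text: str) -> Counter:
    """Lowercased bag of words; a crude stand-in for a learned text embedding."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


@dataclass
class MemoryStore:
    half_life_days: float = 7.0  # assumed: a memory's weight halves every 7 days
    items: list[tuple[float, str, Counter]] = field(default_factory=list)

    def remember(self, text: str, day: float) -> None:
        self.items.append((day, text, tokenize(text)))

    def recall(self, query: str, today: float, k: int = 2) -> list[str]:
        """Return up to k memories ranked by similarity times exponential recency decay."""
        q = tokenize(query)
        scored = []
        for day, text, toks in self.items:
            recency = 0.5 ** ((today - day) / self.half_life_days)
            scored.append((cosine(q, toks) * recency, text))
        scored.sort(reverse=True)
        return [text for score, text in scored[:k] if score > 0]


memory = MemoryStore()
memory.remember("Prefers green tea in the morning", day=1)
memory.remember("Felt stressed about the project deadline", day=10)
memory.remember("Switched to black tea recently", day=13)
print(memory.recall("which tea do I like", today=14))
# prints: ['Switched to black tea recently', 'Prefers green tea in the morning']
```

Both tea notes match the query equally well on content, but the newer one ranks first because the recency factor discounts older memories; a longer half-life would shift that balance toward long-standing preferences.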

Furthermore, these companions are designed to grasp the dynamic context of your life. They understand your calendar, your location, the people around you, and even the objects within your environment through their sensory inputs. This contextual awareness, combined with a simulated form of empathy, allows the AI to respond not just logically, but also appropriately to your emotional state. While AI does not “feel” in the human sense, it can be programmed to recognize and react to human emotions in ways that provide comfort, support, or appropriate assistance, fostering a bond that feels uniquely personal and deeply supportive.
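
One way to picture how situational context and a recognized emotion might jointly shape a reply is a small rule-based policy. Everything here is hypothetical: the `Context` fields, the emotion labels, the 0.6 confidence floor, and the 15-minute “busy” cutoff are illustrative assumptions, and a real companion need not be rule-based at all.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Context:
    emotion: str                          # label from a hypothetical upstream emotion classifier
    confidence: float                     # that classifier's confidence, in [0, 1]
    minutes_to_next_event: Optional[int]  # from the user's calendar; None if nothing is scheduled


def choose_response_style(ctx: Context, min_confidence: float = 0.6) -> str:
    """Map a detected emotion plus situational context to a response style.

    Low-confidence emotion readings fall back to a neutral style instead of guessing.
    """
    if ctx.confidence < min_confidence:
        return "neutral"
    busy = ctx.minutes_to_next_event is not None and ctx.minutes_to_next_event < 15
    if ctx.emotion in {"sad", "anxious"}:
        # Offer a short check-in if an event is imminent, a longer conversation otherwise.
        return "brief_check_in" if busy else "supportive_conversation"
    if ctx.emotion == "frustrated":
        return "concise_help"
    return "neutral"


print(choose_response_style(Context("anxious", 0.8, 10)))    # brief_check_in
print(choose_response_style(Context("anxious", 0.8, None)))  # supportive_conversation
print(choose_response_style(Context("sad", 0.4, None)))      # neutral
```

The fallback to a neutral style when the emotion reading is uncertain reflects the point above: the system recognizes and reacts to emotion rather than feeling it, so a misread signal should not trigger an inappropriate response.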

The economic and societal tremors

The emergence of truly personal, multimodal AI companions is not merely a technological marvel; it’s a catalyst for profound economic and societal shifts. Major tech giants and countless startups are pouring billions into this sector, recognizing its transformative potential across virtually every industry. From enhancing elderly care with proactive monitoring and companionship to revolutionizing education with personalized tutors, and even assisting with mental well-being through empathetic interaction, the applications are vast and varied. This new frontier promises to create entirely new markets and redefine existing ones, driving innovation and investment at an unprecedented scale.

However, this seismic shift also brings significant challenges. Ethical considerations around privacy, data security, and the potential for deep psychological dependence are paramount. The question of job displacement, as AI companions assume roles traditionally held by humans, also looms large. As we embark on this journey, a balanced approach that prioritizes ethical development, robust regulatory frameworks, and thoughtful integration into society will be crucial to harnessing the immense benefits while mitigating the potential risks. Below are some illustrative projections for market growth in related AI sectors:

| AI Companion Sector | 2023 Estimated Market Size (USD billion) | 2030 Projected Market Size (USD billion) | CAGR (2023-2030) |
|---|---|---|---|
| Personal AI Assistants & Companions | ~8.5 | ~70.0 | ~35% |
| AI-powered Elderly Care & Support | ~1.2 | ~15.0 | ~40% |
| AI in Personalized Education | ~3.0 | ~25.0 | ~30% |

Note: These figures are illustrative estimates based on various market research projections and may vary.
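
The CAGR column can be checked against the two market-size columns, since the compound annual growth rate over n years is (end / start)^(1/n) - 1. This short Python sketch recomputes it from the table’s own endpoint values over the seven years from 2023 to 2030; the function and variable names are illustrative.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1


# Endpoint values and stated CAGR (%) copied from the table (USD billion, 2023 -> 2030).
rows = {
    "Personal AI Assistants & Companions": (8.5, 70.0, 35),
    "AI-powered Elderly Care & Support": (1.2, 15.0, 40),
    "AI in Personalized Education": (3.0, 25.0, 30),
}

for sector, (start, end, stated) in rows.items():
    implied = cagr(start, end, years=2030 - 2023)
    print(f"{sector}: implied {implied:.1%} vs stated ~{stated}%")
```

The first row is consistent (about 35%), but the endpoints imply roughly 43% for elderly care and roughly 35% for personalized education, so either the market sizes or the stated rates are loosely rounded, which is in keeping with the note that these figures are illustrative.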

Conclusion

We stand at the threshold of a new era, one where the long-held dream of truly personal, intelligent AI companions is rapidly transitioning from scientific speculation to practical reality. We’ve explored how multimodal capabilities, which allow AI to see, hear, and integrate diverse sensory inputs, are enabling a richer, more contextual understanding of human interaction. This, coupled with real-time processing and the ability to foster genuine personalization through memory and contextual awareness, is paving the way for AI that doesn’t just respond to us, but truly understands and anticipates our needs. The economic and societal implications are profound, promising revolutionary changes across numerous sectors, from healthcare to education and personal assistance.

This frontier, while brimming with unprecedented opportunities for human augmentation and enrichment, also demands careful ethical consideration and proactive governance. As these sophisticated companions become integral to our lives, questions of privacy, data security, and the very nature of human-AI relationships will require thoughtful navigation. The journey has begun; the challenge now lies in ensuring that this powerful technology is developed and deployed responsibly, shaping a future where AI companions genuinely enhance, rather than diminish, the human experience.